You need people who can design proper test scenarios, analyze results accurately, and create meaningful follow-up experiments.

Poorly designed experiments may not give you concrete insight into why visitors behave the way they do on your website. You need criteria to determine, for example, which elements on a page you should test, which external and internal factors could affect the results, and how to design the next phases of your testing program.

As essential as testing is to any conversion optimization project, it should be conducted only after other equally essential stages of optimization work are complete, such as persona development, site analysis, and design and copy creation. Each of these stages provides a building block toward a highly optimized website that converts visitors into clients.

Below are four steps to follow when creating a successful split test. Please refer to this article for a more detailed guide on how we plan and conduct our conversion optimization projects.

Problem identification

Before thinking about which page elements to test, start by analyzing the problem areas on your website.

How do you do that? Several conversion optimization methodologies can help you. Invesp uses the Conversion Framework for page analysis.

The Conversion Framework analyzes seven different areas on the page.

Elements in these seven areas affect whether visitors stay on your website or leave. Keep in mind that the same element can have a different impact depending on the type of page you are evaluating.

Using the Conversion Framework, a conversion optimization expert can easily pinpoint 50 to 150 problems on a webpage.

We do NOT believe you should attempt to fix all of these at once. Prioritize and focus on the top three to seven problems to get started.

Test hypothesis

A hypothesis is a predictive statement about the impact of removing or fixing one of the problems identified on a webpage.

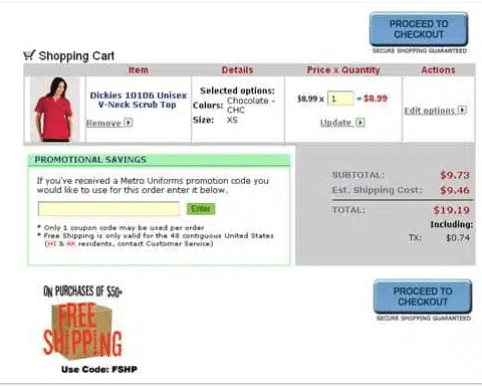

The image below shows the original shopping cart design for one of our clients, a retailer of nursing uniforms. When our team examined the client's analytics data, we noticed a high checkout abandonment rate.

Original Design of the Shopping Cart

Abandonment rates for unoptimized checkouts usually range from 65% to 75%.

This client reported a checkout abandonment rate close to 82%, and nothing on the checkout page explained such a high rate.

Our team then conducted a usability test. We invited nurses to place an order on the site while the optimization team observed them, and we conducted exit interviews to gather information from the participants. The nurses revealed that their biggest concern was paying too much for a product. Nurses are price conscious and know that they can buy the same item from competing websites or brick-and-mortar stores.

Our client was aware of the price sensitivity issue and knew that price played a significant role in whether visitors purchased a uniform. The client’s website already offered a money-back guarantee and a 100% price match. The problem was that these assurances were displayed only on the homepage, while most visitors landed on category and product pages, so they never learned about them.

The hypothesis for this test: the usability study revealed that visitors are sensitive to price, so adding assurances will ease their price concerns and reduce cart abandonment by 20%.

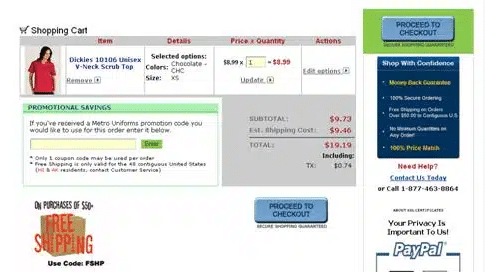

The image below shows the new design of the shopping cart.

The team added an “assurance center” to the left-hand navigation of the cart page, reminding visitors of the 100% price match and the money-back guarantee.

The new version of the page resulted in a 30% reduction in shopping cart abandonment.
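To make a hypothesis like this measurable, it helps to pin down how abandonment and its reduction are computed. The sketch below assumes that abandonment rate is the share of started carts that did not end in an order, and that the 20% target is a relative reduction; the article does not say whether its 20% and 30% figures are relative or in percentage points. The cart and order counts are hypothetical, not the client's data.

```python
def abandonment_rate(carts_started: int, orders_completed: int) -> float:
    """Share of started carts that did not end in a completed order."""
    if carts_started <= 0:
        raise ValueError("carts_started must be positive")
    return 1 - orders_completed / carts_started


def relative_reduction(before: float, after: float) -> float:
    """Relative drop from `before` to `after` (0.2 means a 20% reduction)."""
    return (before - after) / before


# Hypothetical counts for illustration only.
control = abandonment_rate(carts_started=1000, orders_completed=180)    # ≈ 0.82
variation = abandonment_rate(carts_started=1000, orders_completed=420)  # ≈ 0.58

reduction = relative_reduction(control, variation)
target = 0.20  # hypothesized reduction, assumed here to be relative
print(f"control {control:.1%}, variation {variation:.1%}, reduction {reduction:.1%}")
print("hypothesis target met" if reduction >= target else "hypothesis target not met")
```

Writing the target down as a number before the test starts makes it harder to reinterpret the result afterwards.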

A hypothesis that works for one website may fail, or even worse, deliver negative results, on another site.

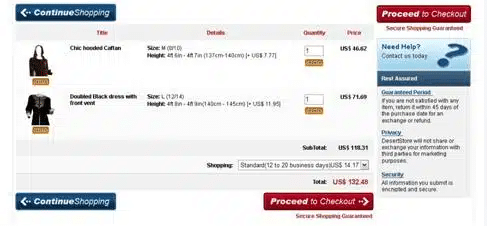

After the results of the previous client’s test were published in Internet Retailer magazine, another client approached us to test an assurance center on their site. This client was also looking for a way to reduce their cart abandonment rate.

The image below shows the original design of the cart page:

The following image shows the new design of the cart page with the assurance center added to the left navigation:

This test used the same hypothesis as the previous one: most visitors did not convert because of price-related FUD (fear, uncertainty, and doubt), and adding assurances to the cart page would ease shoppers’ concerns.

When we tested the new version with the assurance center against the control, the results pointed to an entirely different outcome: the assurance center caused the website’s conversion rate to drop by 4%. So while the assurance center helped one client, it had a negative impact on another.

Can we say with absolute certainty that adding an assurance center for the second client would always produce negative results? No.

Several factors could have influenced this particular design and caused the drop in conversions. The assurance center’s design, copy, or location could have been the real cause.

Validating the hypothesis through testing and creating a follow-up hypothesis is at the heart of conversion optimization. In this case, we needed to test many different elements around the assurance center before we could determine its impact on conversions.

Tests that increase conversion rates are excellent at validating our initial assumptions about visitors and our hypothesis.

We do not mind tests that reduce conversion rates, because they still teach us something about our hypothesis.

What does worry us are tests that produce neither an increase nor a decrease in conversion rates.
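One way to make the increase, decrease, or no-change distinction concrete is to put a confidence interval around the difference in conversion rates between a variation and the control. The sketch below uses a normal-approximation (Wald) 95% interval for the difference of two proportions. This is a generic statistical technique chosen for illustration, not necessarily how we or any particular testing tool evaluate results, and the visitor and conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def classify_test(conv_a: int, n_a: int, conv_b: int, n_b: int,
                  confidence: float = 0.95) -> str:
    """Compare variation B with control A using a Wald interval on p_b - p_a."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    # Standard error of the difference between two independent proportions.
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    low, high = diff - z * se, diff + z * se
    if low > 0:
        return "increase"      # whole interval above zero
    if high < 0:
        return "decrease"      # whole interval below zero: still informative
    return "inconclusive"      # interval spans zero: no detectable change


# Hypothetical results with 10,000 visitors in each arm.
print(classify_test(conv_a=300, n_a=10_000, conv_b=360, n_b=10_000))
print(classify_test(conv_a=300, n_a=10_000, conv_b=240, n_b=10_000))
print(classify_test(conv_a=300, n_a=10_000, conv_b=305, n_b=10_000))
```

An "inconclusive" result is the flat outcome described above: the data cannot distinguish the variation from the control, so it neither supports nor refutes the hypothesis.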

Create variations based on the test hypothesis

Once you have the hypothesis, the next step is to create new page designs that test it.

Be careful when creating new designs, and do not go overboard with the number of variations. Most split testing software lets you create thousands, if not millions, of variations for a single page. Keep in mind that validating each new variation requires a certain number of conversions, so every variation you add increases the traffic and time the test needs.

For high-converting websites, we like to limit a test to fewer than seven page variations. For smaller sites, we limit it to two or three new variations.
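To see why each variation carries a traffic cost, the sketch below estimates how many visitors each arm of a test needs using the standard normal-approximation sample-size formula for comparing two proportions, then multiplies by the number of arms (control plus new variations). The 3% baseline conversion rate, the 10% relative lift worth detecting, the 5% significance level, and 80% power are hypothetical inputs chosen for illustration. Testing tools may use different formulas, and this sketch ignores multiple-comparison corrections, which would make each extra variation even more expensive.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_arm(baseline: float, relative_lift: float,
                     alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors each arm needs to detect `relative_lift` over `baseline`."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def total_visitors(new_variations: int, baseline: float, relative_lift: float) -> int:
    """The control and every new variation each need a full sample."""
    arms = new_variations + 1
    return arms * visitors_per_arm(baseline, relative_lift)


# Hypothetical: 3% baseline conversion rate, detecting a 10% relative lift.
for k in (2, 3, 6):
    print(k, "new variations ->", total_visitors(k, baseline=0.03, relative_lift=0.10), "visitors")
```

Because the total grows linearly with the number of arms, a smaller site that cannot supply this much traffic in a reasonable time is better served by two or three variations.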

Let visitors be the judge: test the new designs

How do you judge the quality of the new designs you introduced to test your hypothesis? You let your visitors be the judge through A/B or multivariate testing.

Keep the following guidelines in mind when conducting your tests:

- Select A/B testing software that speeds up test implementation. Technology should help you implement the test faster and should NOT slow you down.

- Do not run your test for less than two weeks. Several factors could affect your test results, so let the testing software collect data long enough before concluding the test.

- Do not run your test for longer than four weeks. Several external factors could pollute your test results, so limit their impact by limiting the test length.
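The two- and four-week bounds can be combined with a traffic estimate to check whether a planned test is feasible before launching it. The sketch below assumes you already know the total number of visitors the test needs (for example, from a sample-size calculation) and the average number of daily visitors entering the test. It rounds the duration up to whole weeks so that each day of the week is represented equally; that rounding is our assumption rather than a rule stated above. The function name and all numbers are hypothetical.

```python
from math import ceil

MIN_DAYS = 14  # run for at least two weeks
MAX_DAYS = 28  # run for no more than four weeks


def plan_duration(required_visitors: int, daily_visitors: int) -> tuple[int, str]:
    """Return (days to run, verdict), rounding the duration up to whole weeks."""
    days_needed = ceil(required_visitors / daily_visitors)
    days = max(MIN_DAYS, ceil(days_needed / 7) * 7)
    if days > MAX_DAYS:
        return days, "too long: consider fewer variations"
    return days, "ok"


# Hypothetical traffic figures.
print(plan_duration(required_visitors=160_000, daily_visitors=10_000))  # (21, 'ok')
print(plan_duration(required_visitors=160_000, daily_visitors=4_000))   # (42, 'too long: consider fewer variations')
```

When the verdict is "too long", reducing the number of variations lowers the required traffic so the test can finish inside the four-week window.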